Recently, my team and I were working on a project that required integrating an LLM (large language model). Naturally, we wanted the best results. So it should be simple, right? Just grab the top model, plug it in, and go. But of course, it’s never that easy.

It turns out, choosing the “best” model is more nuanced than just performance. For one, performance is subjective—it depends heavily on your specific use case. But more importantly, cost becomes a major factor in the decision-making process.

As a consumer, you don’t really deal with this complexity. You’ve got three tiers: free, the $20/month subscription, and maybe the $200 Pro tier if you’re using something like OpenAI. Those guardrails help. You don’t need to decide between 10+ models. But if you're a developer? The world is your oyster—and that can get messy fast.

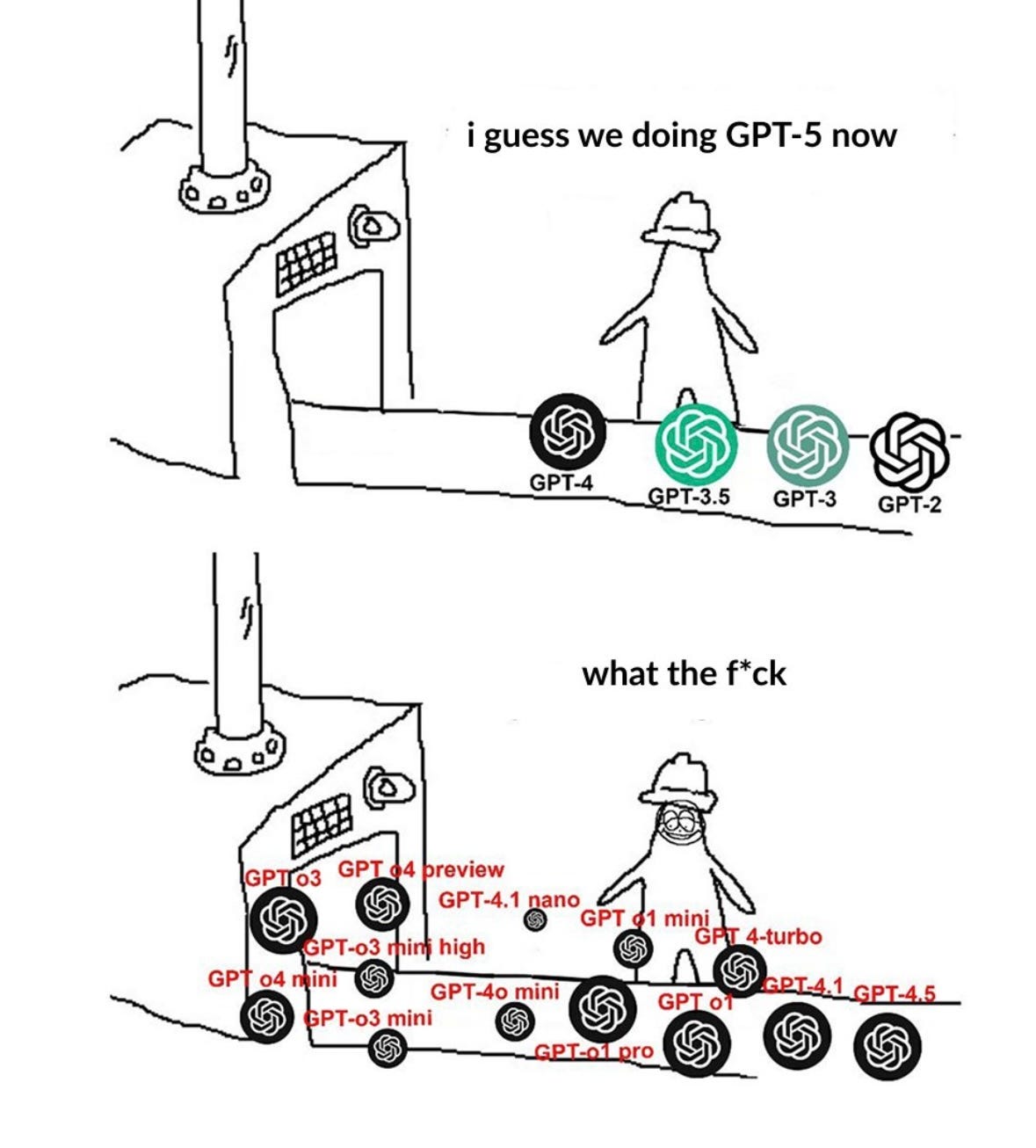

Take OpenAI, for example. Even within one company, you've got GPT-4.5, GPT-4o, GPT-4o-mini, variants labeled "high," and on and on. Each model varies in performance, latency, and price. So now you're not just choosing a model; you're weighing trade-offs in cost, speed, and output quality.

Tools like Cursor or Windsurf help by letting you swap models easily. But even then, you have to ask: Do I really want to wait two minutes to re-run the same query on another model? Did I lose context in the switch?

We’re still in a phase where everyone’s trying to stake their claim in the space, and understandably so. But the challenge is: there isn’t one model to rule them all—yet. That’s both a good thing and a confusing thing.

Here’s the simple framework I use to evaluate which model to use in development:

Define evaluation metrics. What’s the model being used for? How good does it need to be? Forget general benchmarks—they may not apply. Set your own based on the task at hand.

Consider pricing and spend limits. What are the trade-offs? Is the model performance worth the cost?

Think long term. What’s the switching cost if I change models later? Am I betting on a provider that’s investing in rapid improvements?
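To make the first two steps concrete, here is a minimal sketch of how I might turn them into numbers. Everything in it is a placeholder: the model names, the per-token prices, and the pass rates are made up for illustration, and `pass_rate` stands in for whatever score you get from running each model against your own task-specific test cases. It assumes a provider that bills separately per input and output token.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str                   # hypothetical model name
    input_price_per_1m: float   # USD per 1M input tokens (placeholder value)
    output_price_per_1m: float  # USD per 1M output tokens (placeholder value)
    pass_rate: float            # share of YOUR test cases passed, 0.0 to 1.0


def monthly_cost(c: Candidate, requests: int, in_tokens: int, out_tokens: int) -> float:
    """Estimated monthly spend in USD for a given traffic profile."""
    per_request = (
        in_tokens * c.input_price_per_1m + out_tokens * c.output_price_per_1m
    ) / 1_000_000
    return per_request * requests


def pick_model(
    candidates: list[Candidate],
    min_pass_rate: float,
    budget: float,
    requests: int,
    in_tokens: int,
    out_tokens: int,
) -> Optional[Candidate]:
    """Return the cheapest model that is good enough and within budget, or None."""
    viable = [
        c
        for c in candidates
        if c.pass_rate >= min_pass_rate
        and monthly_cost(c, requests, in_tokens, out_tokens) <= budget
    ]
    if not viable:
        return None
    return min(viable, key=lambda c: monthly_cost(c, requests, in_tokens, out_tokens))


# Illustrative numbers only, not real prices or benchmark results.
CANDIDATES = [
    Candidate("model-large", 10.0, 30.0, 0.95),
    Candidate("model-mid", 2.0, 8.0, 0.91),
    Candidate("model-small", 0.2, 0.8, 0.78),
]

# Assumed traffic: 100k requests/month, ~1,000 input and ~500 output tokens each.
choice = pick_model(
    CANDIDATES,
    min_pass_rate=0.90,
    budget=1000.0,
    requests=100_000,
    in_tokens=1000,
    out_tokens=500,
)
print(choice.name if choice else "No model meets the bar within budget")
```

The point of the sketch is the order of operations: set the quality bar first, then let cost break the tie. If nothing clears both the bar and the budget, that is a signal to revisit one of them rather than quietly ship the cheapest option.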

Lastly, if you want a quick side-by-side comparison, I usually just ask Perplexity to generate a table comparing the models I’m evaluating. Saves time—and your sanity.

Events You Won’t Want to Miss!

QlikConnect | May 13–15 | Orlando, FL

I’ll be on site at QlikConnect doing executive interviews and diving into how Qlik is pushing the boundaries of data and AI. If you're curious about how BI and AI are merging in real time, this is the place to be.

👉 Join me: qlikconnect.com

Data Connect | May 20 | San Francisco, CA

Women in Data and Women in Analytics are teaming up for an unforgettable event in SF! I’ll be doing all the things—hosting a panel, leading interviews, and soaking up the energy from this incredible community.

💥 Save 25% with promo code: Womenindata

👉 Register: dataconnectconf.com